Beginners Exploring KONG: I’m That Beginner

Assalamualaikum warahmatullahi wabarakatuh, hello my tech fellas! In this article I will once again practice my English writing skills. Before this, I wrote an article about a security issue that can occur in a default installation of KONG, but as a Penetration Tester I'm not familiar enough with KONG itself. The last time I did full-time web application development was around 2021, and back then I used well-known technologies like Apache2, Nginx, and PHP for the web server and web services.

Since I started doing full-time penetration testing in 2021, I have faced many clients with different tech stacks, and lately, in 2024, I keep seeing HTTP responses whose “Server” header identifies KONG. So in this article, as a beginner in web application engineering, I will explore what KONG can do, why people nowadays use it rather than Nginx, and which main features make people choose it.

Phase #1: Developing the REST API

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
	"math/rand"
	"net"
	"net/http"
	"os"
)

// WeatherResponse is the JSON body returned by /cuaca ("weather").
type WeatherResponse struct {
	City    string `json:"city"`
	Weather string `json:"weather"`
	Temp    string `json:"temp"`
}

var cities = []string{
	"Jakarta", "Sidoarjo", "Surabaya", "Bandung", "Semarang", "Yogyakarta", "Surakarta", "Malang",
	"Denpasar", "Mataram", "Kupang", "Pontianak", "Banjarmasin", "Samarinda", "Palembang",
}

// getCuaca echoes the ?city= query parameter, or picks a random city when it is missing.
// The weather ("Panas" = hot) and the temperature are always the same.
func getCuaca(w http.ResponseWriter, r *http.Request) {
	city := r.URL.Query().Get("city")
	if city == "" {
		city = cities[rand.Intn(len(cities))]
	}

	response := WeatherResponse{
		City:    city,
		Weather: "Panas",
		Temp:    "30°C",
	}

	w.Header().Set("Content-Type", "application/json")
	json.NewEncoder(w).Encode(response)
}

// getPing reports which instance answered: its hostname and first non-loopback IPv4 address.
func getPing(w http.ResponseWriter, r *http.Request) {
	hostname, err := os.Hostname()
	if err != nil {
		log.Printf("Error getting hostname: %v", err)
		http.Error(w, "Unable to get hostname", http.StatusInternalServerError)
		return
	}

	addrs, err := net.InterfaceAddrs()
	if err != nil {
		log.Printf("Error getting IP addresses: %v", err)
		http.Error(w, "Unable to get IP address", http.StatusInternalServerError)
		return
	}

	var ip string
	for _, addr := range addrs {
		if ipNet, ok := addr.(*net.IPNet); ok && !ipNet.IP.IsLoopback() && ipNet.IP.To4() != nil {
			ip = ipNet.IP.String()
			break
		}
	}
	if ip == "" {
		ip = "Unknown IP"
	}

	// "Halo dari hostname: X dengan IP: Y" = "Hello from hostname: X with IP: Y".
	response := fmt.Sprintf("Halo dari hostname: %s dengan IP: %s", hostname, ip)
	w.Header().Set("Content-Type", "text/plain")
	w.Write([]byte(response))
}

func main() {
	http.HandleFunc("/cuaca", getCuaca)
	http.HandleFunc("/ping", getPing)

	port := ":8080"
	fmt.Printf("Server running on port %s\n", port)
	log.Fatal(http.ListenAndServe(port, nil))
}
```

The idea of this simple REST API is to demonstrate some of the main features offered by KONG Gateway, such as the following (there are actually more than these):

- Caching

- Load Balancing

- Rate Limiting

As far as I know, all three of these can also be implemented in Nginx, the older technology I'm familiar with, so why not compare them? That way beginners (of course, that's me) can decide which one is better for future projects. Back to the REST API: there are 2 endpoints, “cuaca” (weather) and “ping”. The “cuaca” endpoint returns the city from the “city” query parameter if it is supplied; otherwise it picks a random city from the list. The “ping” endpoint returns a greeting with the hostname and IP address of the instance the application is running on. What is it for? It is a proof of concept for load balancing, because every response shows which instance answered.
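To make the “ping” output concrete, here is a small Python model of what the Go handler does: take the first non-loopback IPv4 address (or fall back to “Unknown IP”) and format the same greeting. The function names and the list of CIDR strings (standing in for what Go's net.InterfaceAddrs() reports) are my own assumptions; this is a sketch for understanding, not part of the service.

```python
import ipaddress


def pick_instance_ip(interface_addrs):
    """Return the first non-loopback IPv4 address, roughly like the loop in getPing.

    interface_addrs is a hypothetical list of CIDR strings, similar to what
    Go's net.InterfaceAddrs() reports (for example "192.168.110.123/24").
    """
    for cidr in interface_addrs:
        iface = ipaddress.ip_interface(cidr)
        # Skip IPv6 addresses and loopback addresses such as 127.0.0.1.
        if iface.version == 4 and not iface.ip.is_loopback:
            return str(iface.ip)
    return "Unknown IP"


def ping_body(hostname, interface_addrs):
    # Same text format as the Go handler; the counting script later matches it with a regex.
    return f"Halo dari hostname: {hostname} dengan IP: {pick_instance_ip(interface_addrs)}"


print(ping_body("hacking", ["127.0.0.1/8", "::1/128", "192.168.110.123/24"]))
# Halo dari hostname: hacking dengan IP: 192.168.110.123
```

The hostname and IP in the example are the article's main server; any real instance would report its own values.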

Reverse Proxy Load Testing

The purpose of the load testing here is to check the actual response time and how many requests the server can handle, and to answer my list of questions:

- Does serving raw Golang HTTP give better performance?

- Or does serving Golang HTTP behind KONG give better performance?

- Or is serving behind an Nginx reverse proxy much better?

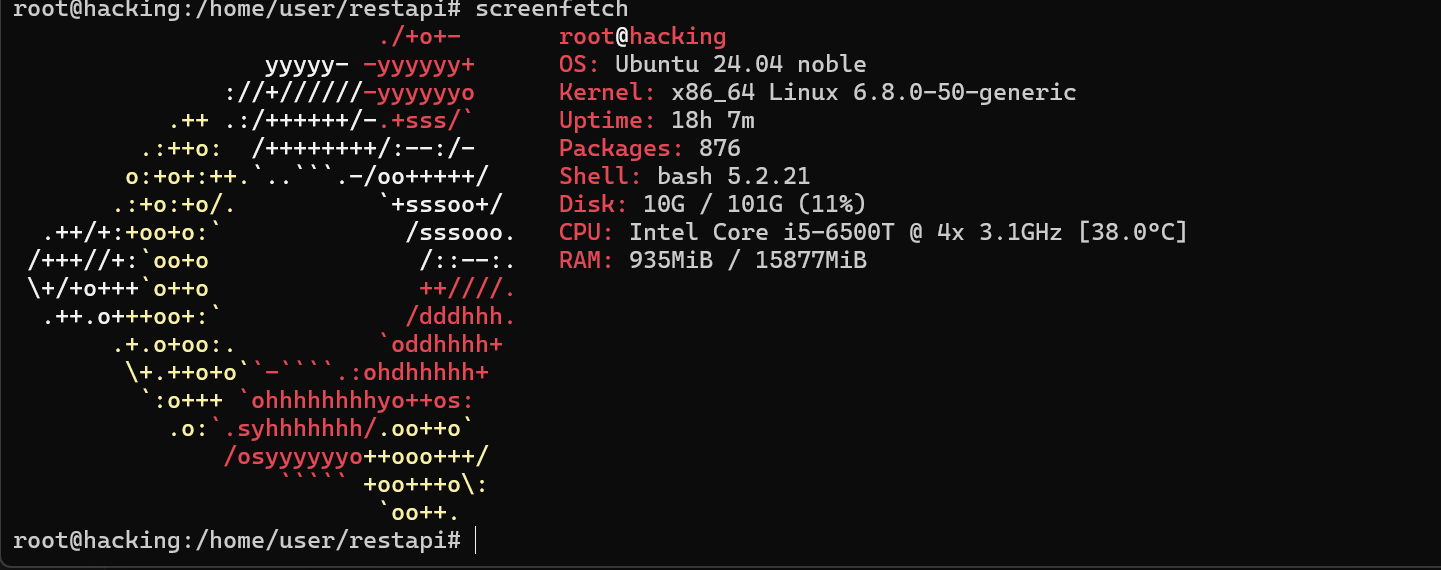

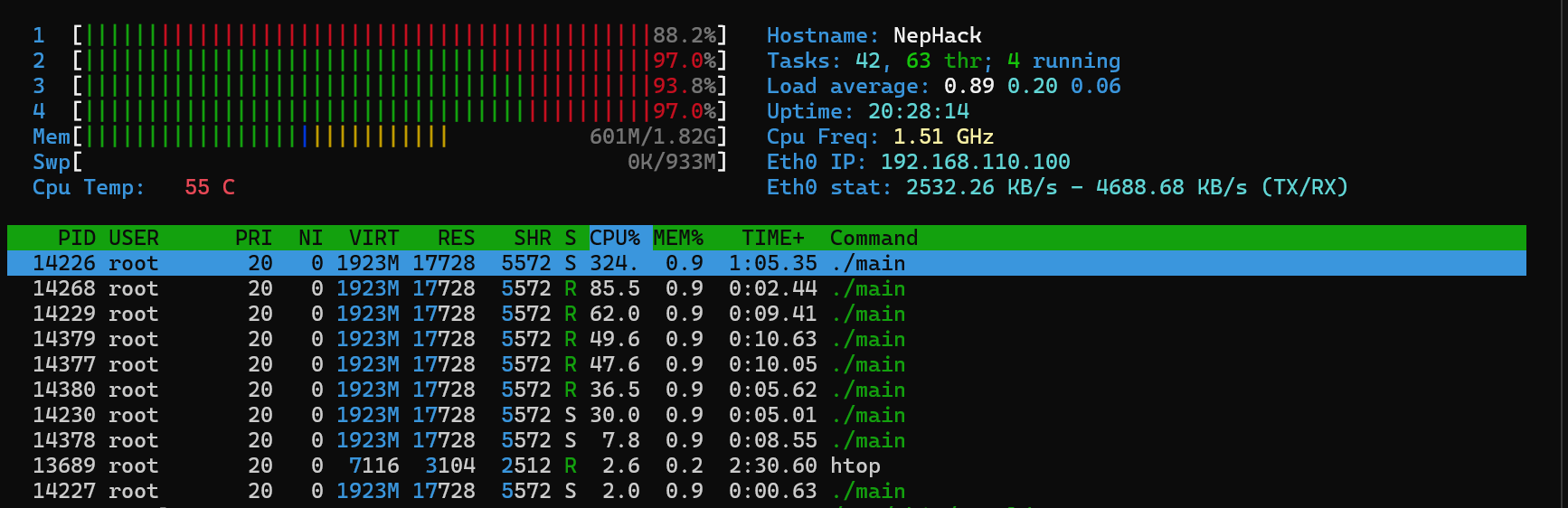

All of these questions can be answered with evidence from the tests themselves, so I have data to back up my answers. Your results may differ from mine even if you run the same test, because of hardware or other unusual factors, so to make it clear, here are the specifications of my server running KONG and the Golang application:

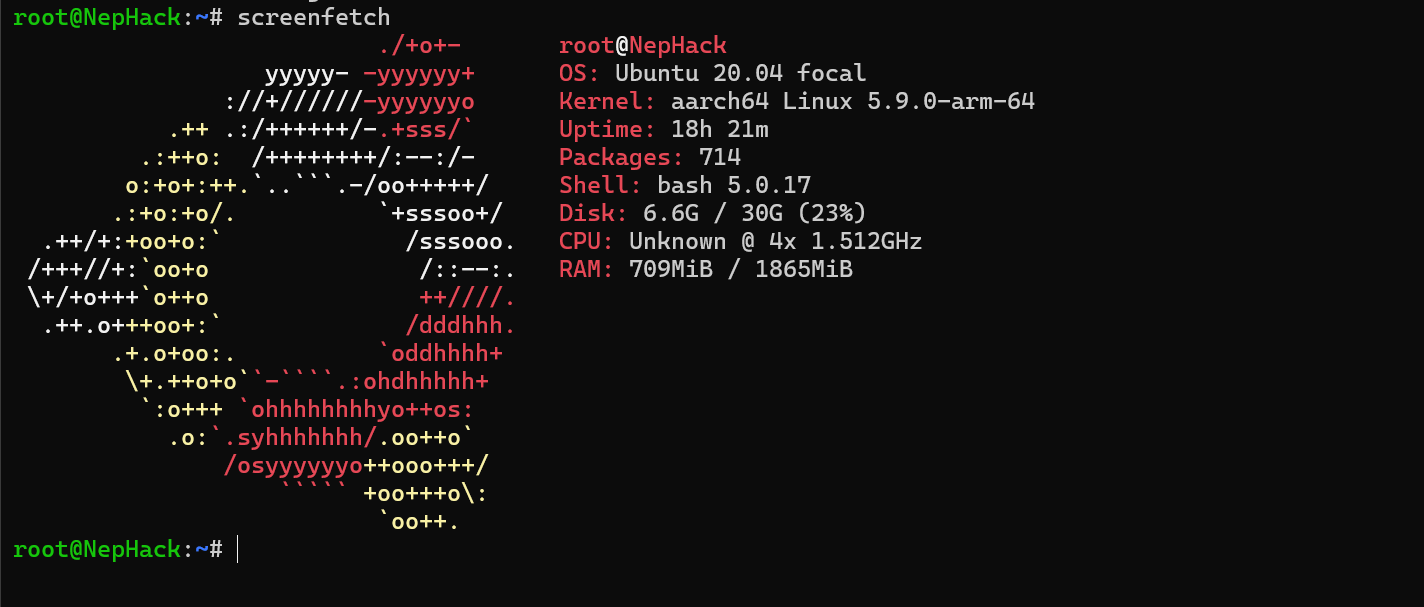

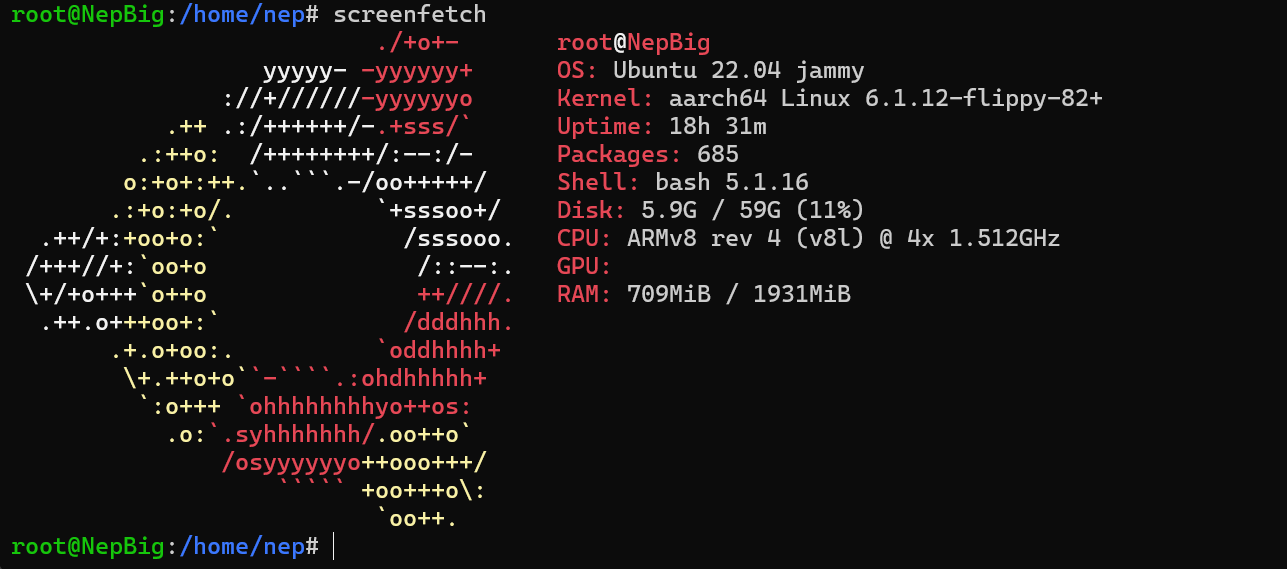

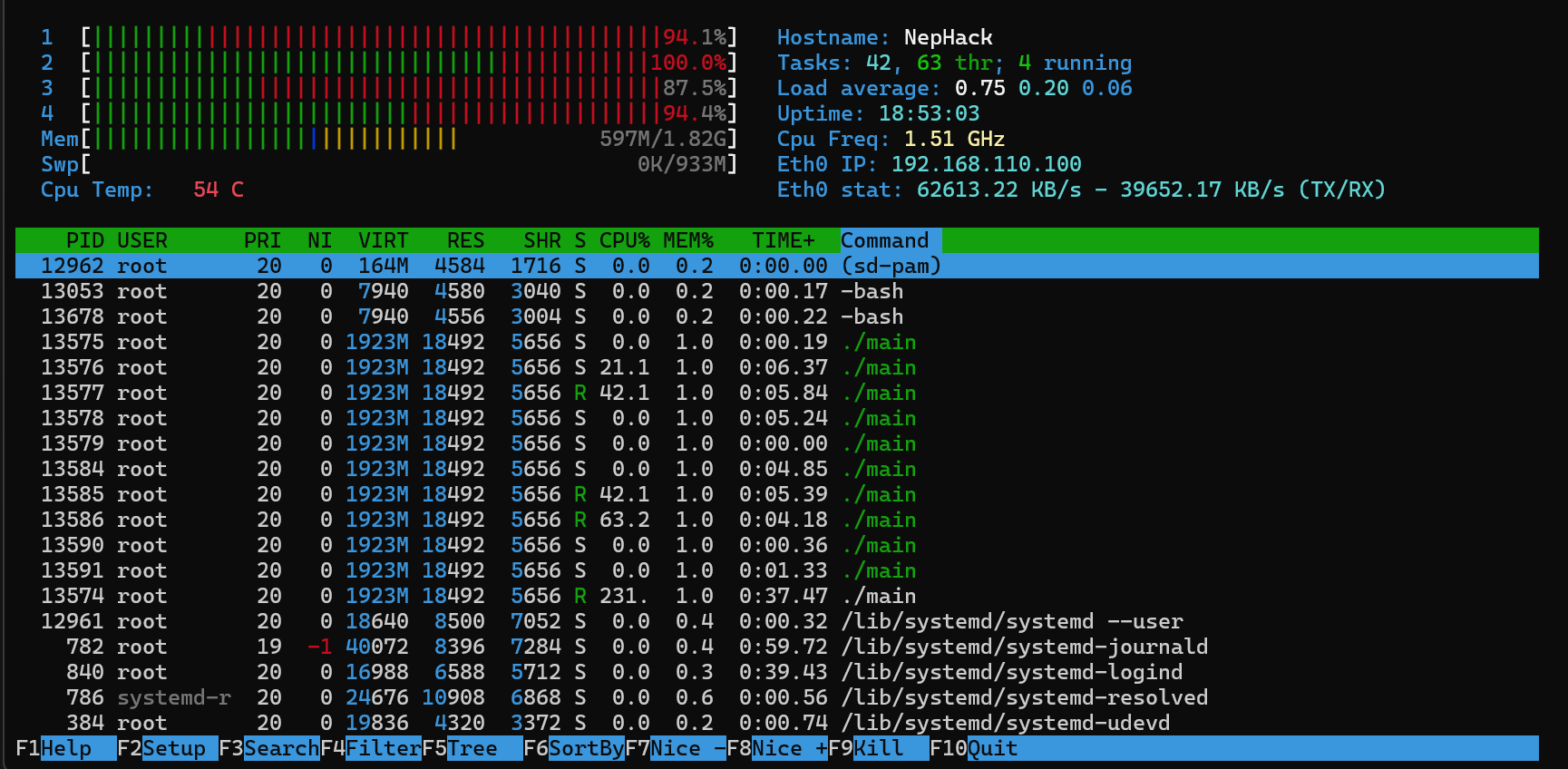

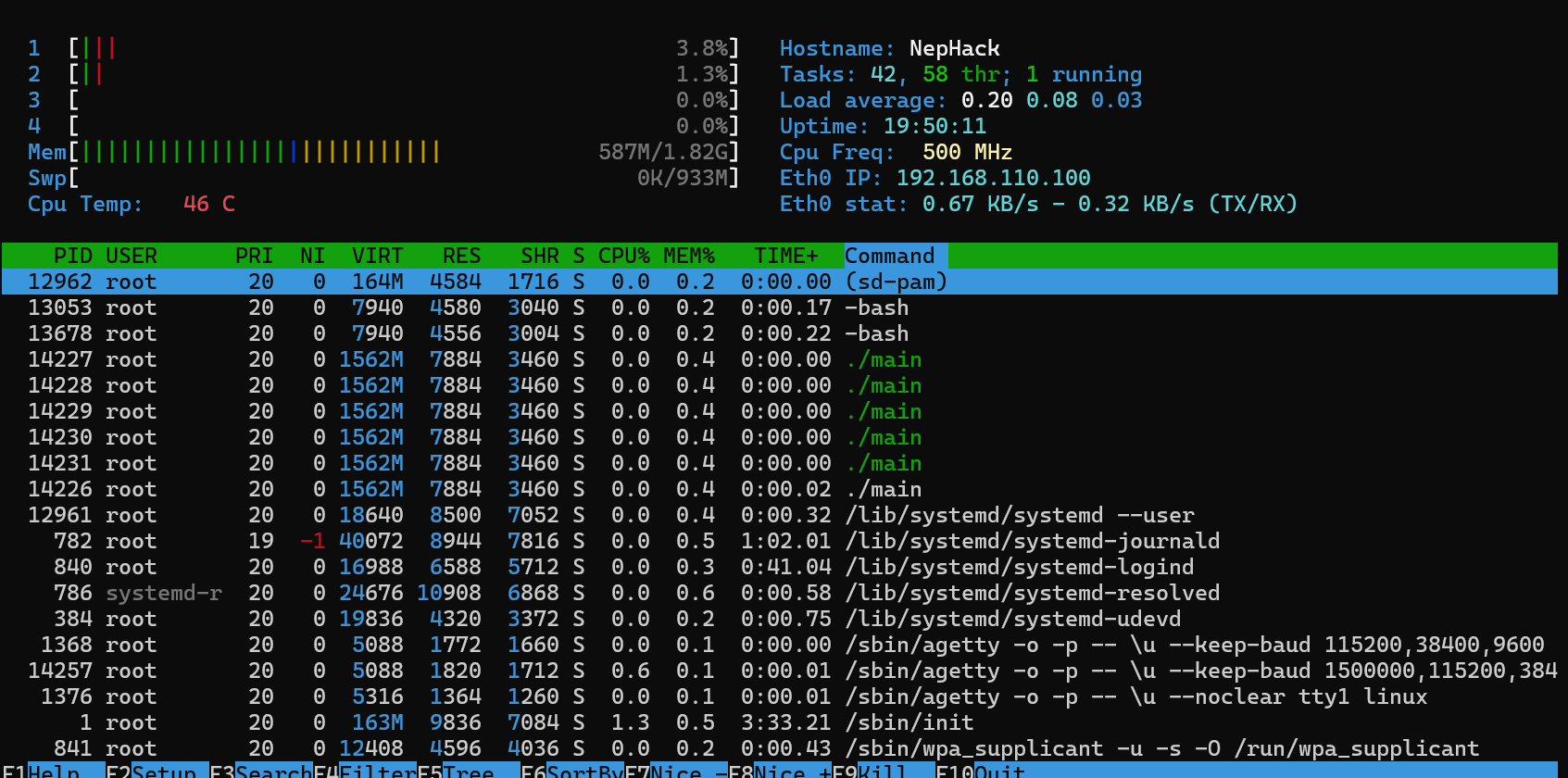

And for the other instances used to demonstrate load balancing, I'm using 2 rooted STBs (set-top boxes) running Armbian OS, with these specifications:

Detailed specifications:

| No | OS | CPU | RAM | Storage |
|---|---|---|---|---|
| 1 (main server) | Ubuntu 24 | Intel Core i5-6500T @ 4x 3.1 GHz | 16 GB | 120 GB SSD |
| 2 (second instance) | Ubuntu 20 | ARMv8 rev 8 (v8l) @ 4x 1.512 GHz | 2 GB | 64 GB microSD |
| 3 (third instance) | Ubuntu 22 | ARMv8 rev 8 (v8l) @ 4x 1.512 GHz | 2 GB | 32 GB microSD |

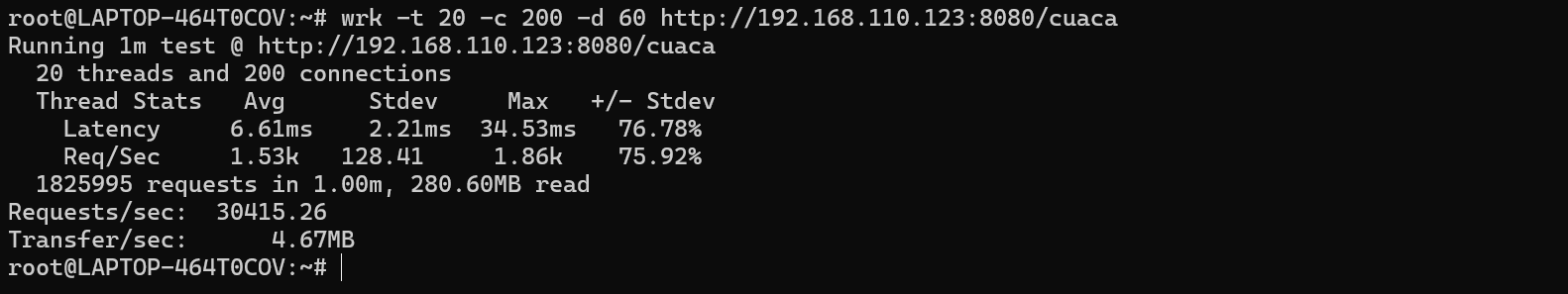

The load testing methodology uses a tool called wrk with 20 threads (-t 20), 200 connections (-c 200), and a test duration of 60 seconds (-d 60), i.e. 1 minute. In plain human language: I keep 200 connections open, spread across 20 threads, and send requests over them for 1 minute.
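Since wrk reports its results as plain text, a small helper makes it easier to turn each run into a row of the comparison tables. This is a sketch: wrk_command and parse_wrk_summary are hypothetical helper names, and the regular expressions assume wrk's default report layout (as in the outputs below).

```python
import re


def wrk_command(url, threads=20, connections=200, duration_s=60, script=None):
    """Build the wrk argument list used in these tests (-t 20 -c 200 -d 60)."""
    cmd = ["wrk", "-t", str(threads), "-c", str(connections), "-d", str(duration_s)]
    if script:
        cmd += ["-s", script]  # optional Lua script, e.g. for logging response bodies
    return cmd + [url]


def parse_wrk_summary(text):
    """Pull the headline numbers out of wrk's plain-text report."""
    total = re.search(r"(\d+) requests in", text)
    rps = re.search(r"Requests/sec:\s*([\d.]+)", text)
    latency = re.search(r"Latency\s+([\d.]+)(us|ms|s)", text)  # first value = average
    non_2xx = re.search(r"Non-2xx or 3xx responses:\s*(\d+)", text)
    return {
        "total_requests": int(total.group(1)),
        "requests_per_sec": float(rps.group(1)),
        "latency_avg": latency.group(1) + latency.group(2),
        "non_2xx_3xx": int(non_2xx.group(1)) if non_2xx else 0,
    }


# Report from the main server, random cities (copied from the results below).
sample = """Running 1m test @ http://192.168.110.123:8080/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     6.61ms    2.21ms  34.53ms   76.78%
    Req/Sec     1.53k   128.41     1.86k    75.92%
  1825995 requests in 1.00m, 280.60MB read
Requests/sec:  30415.26
Transfer/sec:      4.67MB"""

print(parse_wrk_summary(sample))
```

To actually run a test from Python (assuming wrk is installed and on the PATH), something like subprocess.run(wrk_command(url), capture_output=True, text=True) followed by parse_wrk_summary(result.stdout) would do.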

Load Testing on HTTP Served by Golang

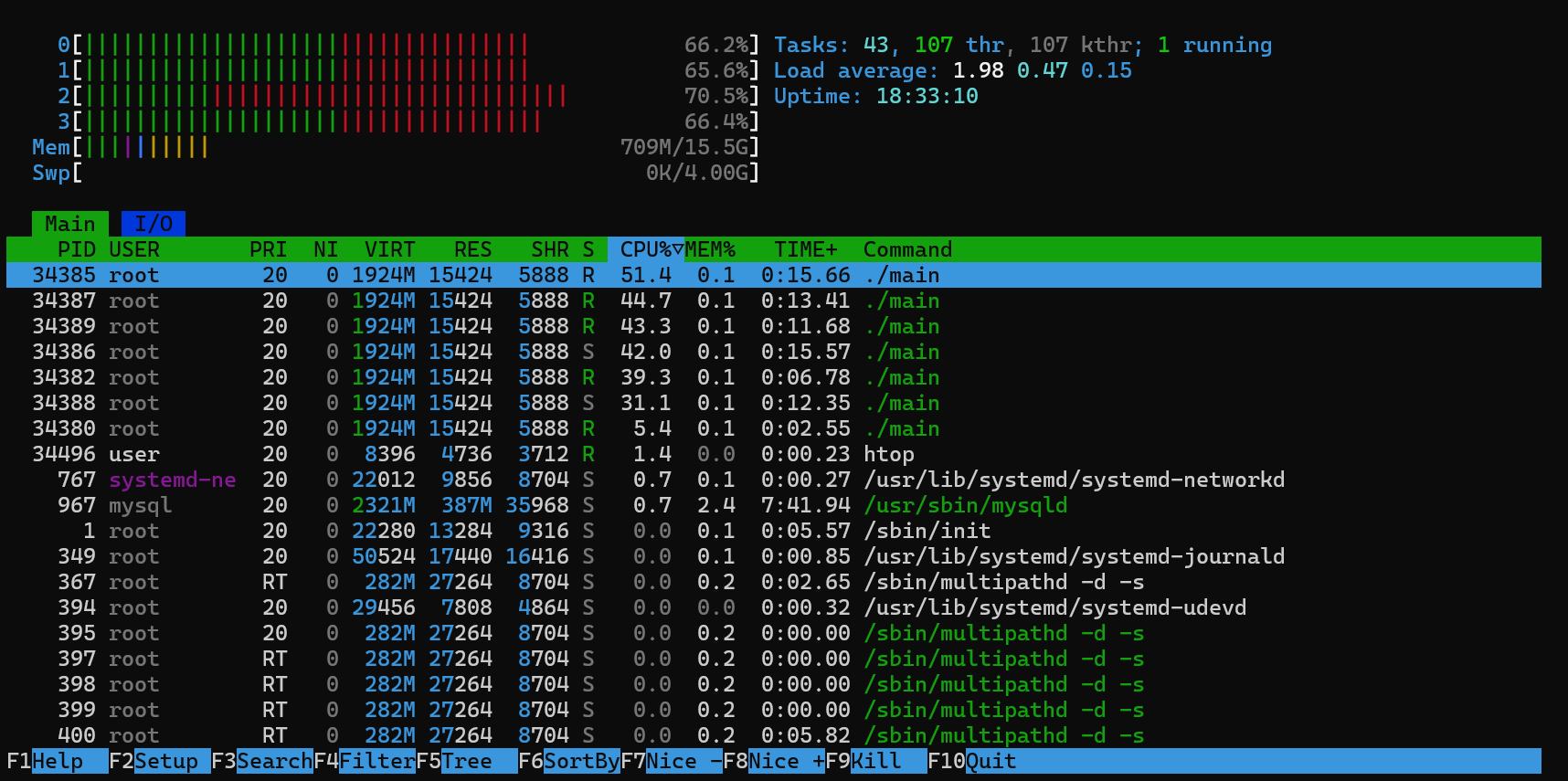

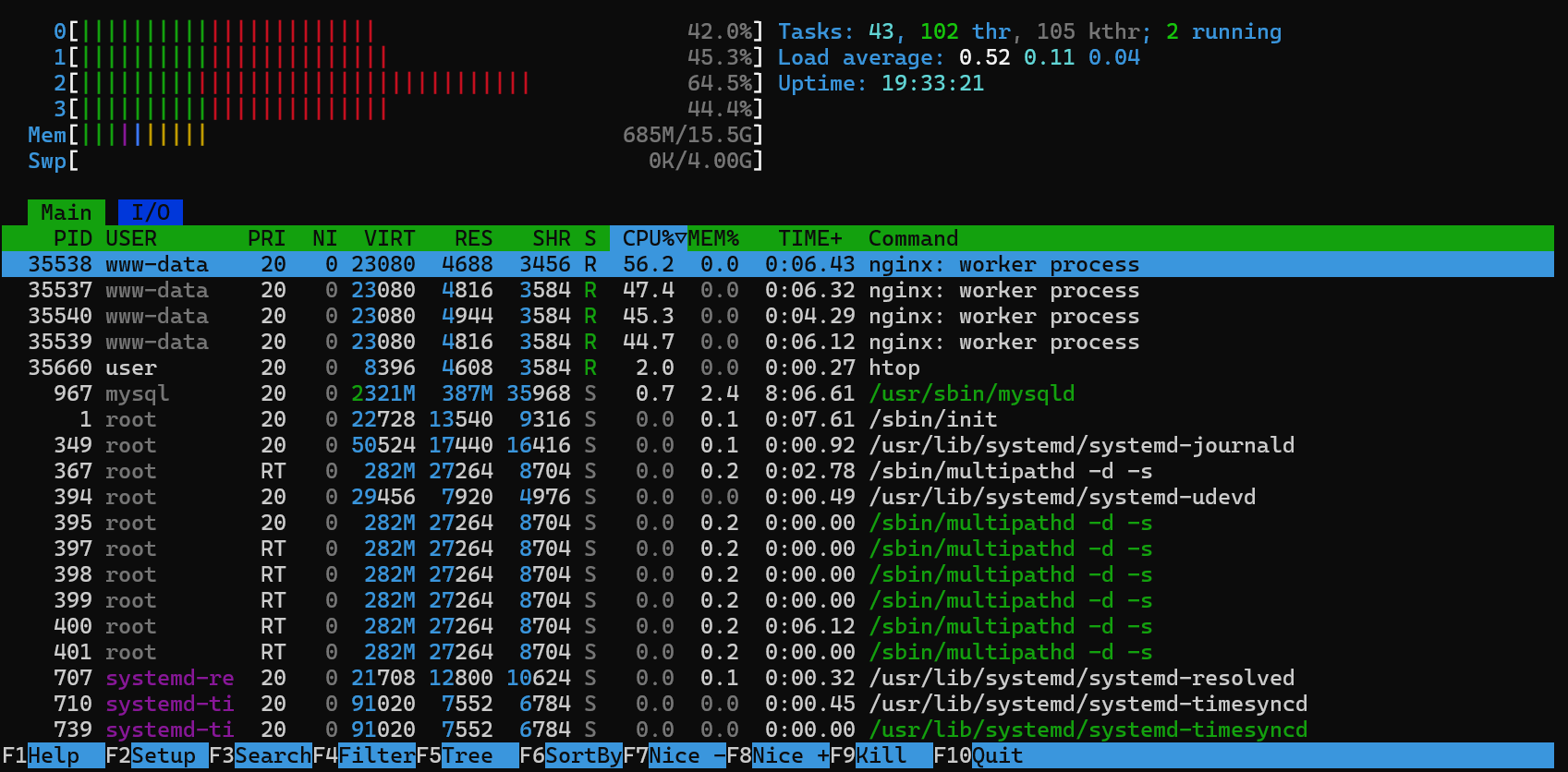

Main server

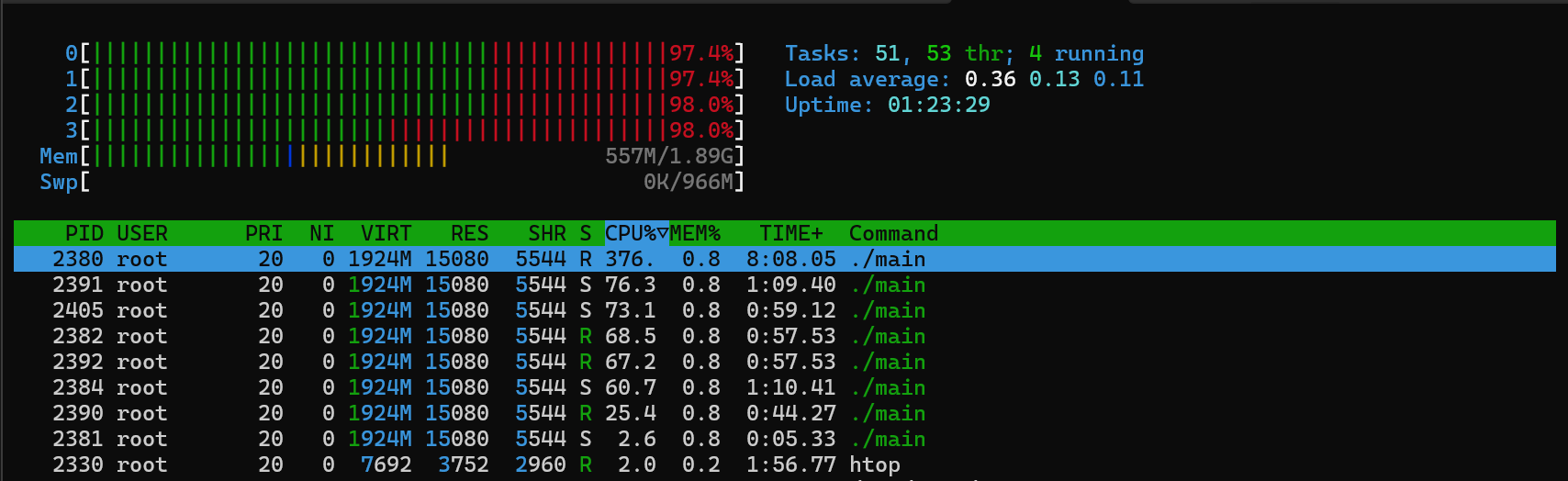

HTOP

random cities

```text
Running 1m test @ http://192.168.110.123:8080/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     6.61ms    2.21ms  34.53ms   76.78%
    Req/Sec     1.53k   128.41     1.86k    75.92%
  1825995 requests in 1.00m, 280.60MB read
Requests/sec:  30415.26
Transfer/sec:      4.67MB
```

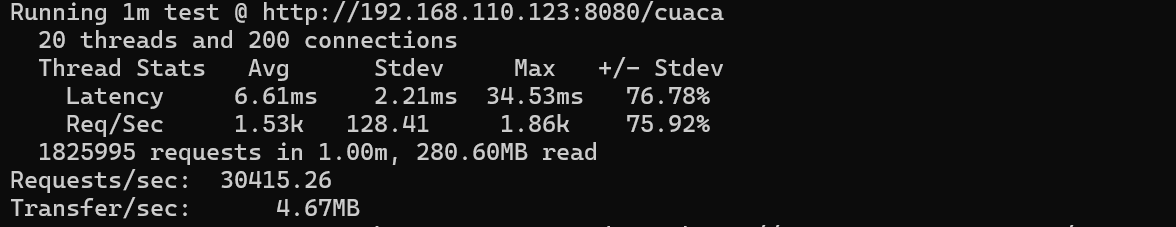

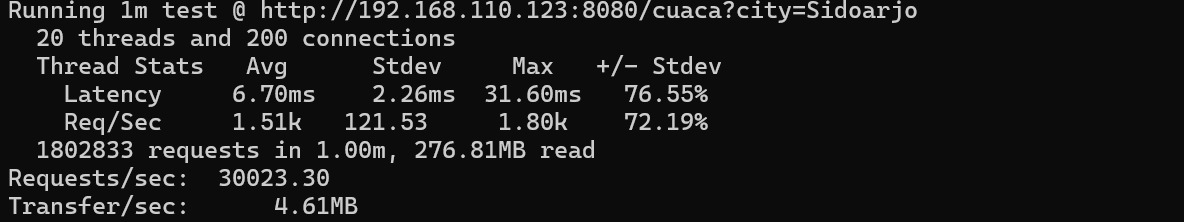

fixed cities

```text
Running 1m test @ http://192.168.110.123:8080/cuaca?city=Sidoarjo
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     6.70ms    2.26ms  31.60ms   76.55%
    Req/Sec     1.51k   121.53     1.80k    72.19%
  1802833 requests in 1.00m, 276.81MB read
Requests/sec:  30023.30
Transfer/sec:      4.61MB
```

Second Instance

HTOP

random cities

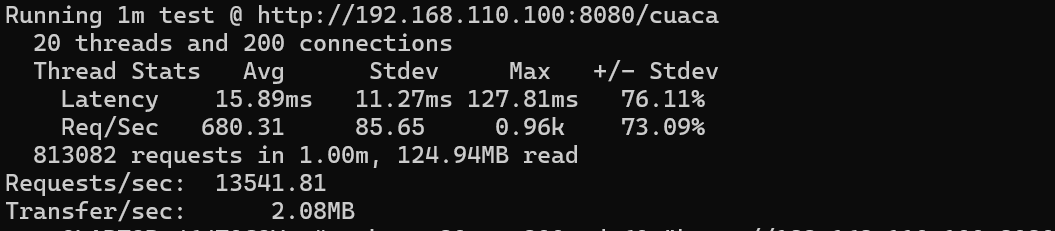

```text
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    15.89ms   11.27ms 127.81ms   76.11%
    Req/Sec   680.31     85.65     0.96k    73.09%
  813082 requests in 1.00m, 124.94MB read
Requests/sec:  13541.81
Transfer/sec:      2.08MB
```

fixed cities

```text
Running 1m test @ http://192.168.110.100:8080/cuaca?city=Sidoarjo
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    16.23ms   11.40ms 142.24ms   74.57%
    Req/Sec   664.41     79.52     0.98k    73.72%
  794036 requests in 1.00m, 121.92MB read
Requests/sec:  13222.44
Transfer/sec:      2.03MB
```

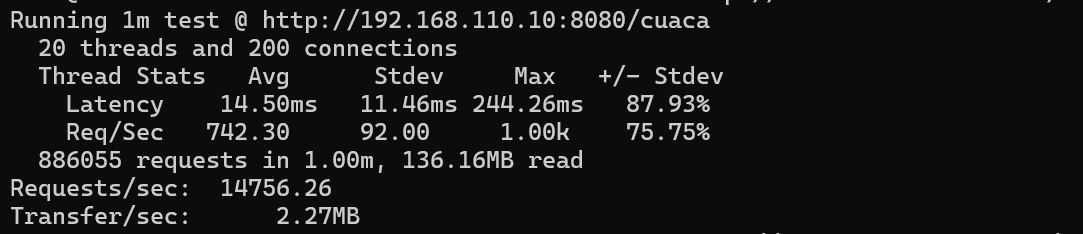

Third Instance

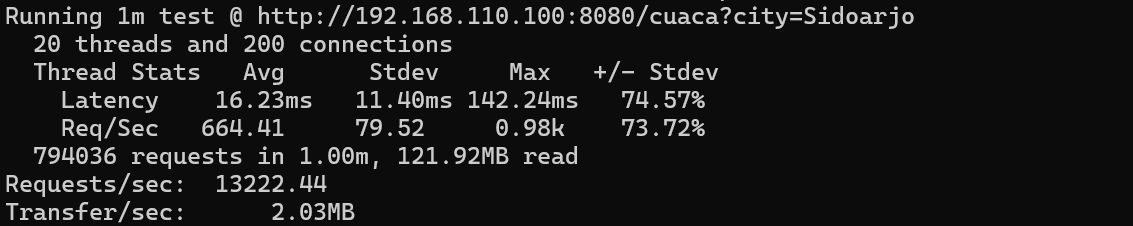

HTOP

random cities

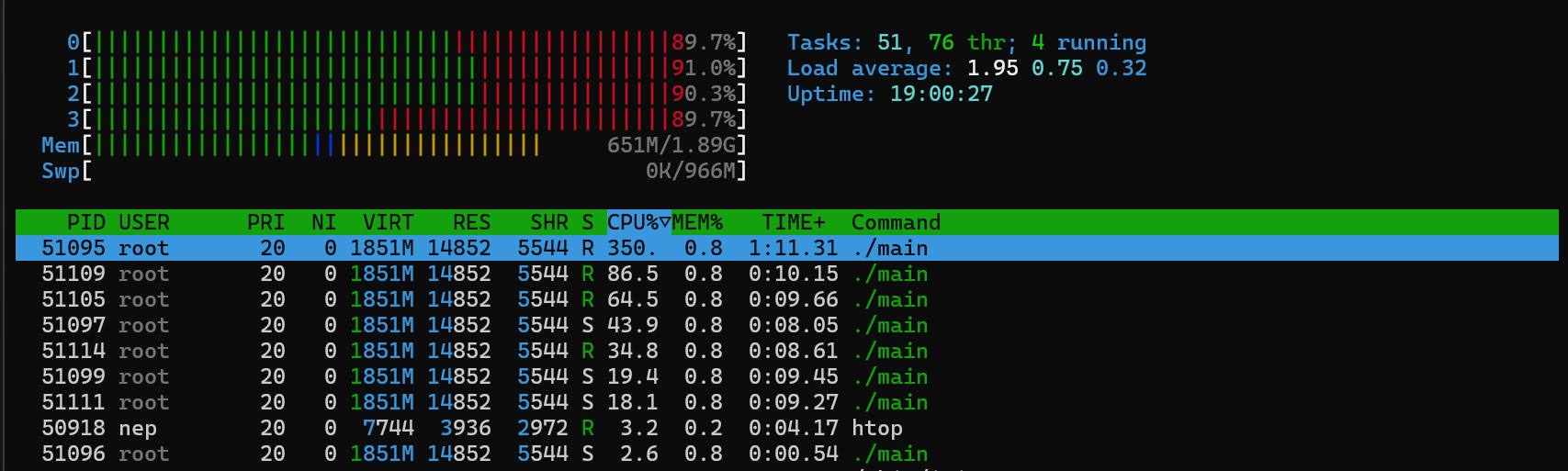

```text
Running 1m test @ http://192.168.110.10:8080/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    14.50ms   11.46ms 244.26ms   87.93%
    Req/Sec   742.30     92.00     1.00k    75.75%
  886055 requests in 1.00m, 136.16MB read
Requests/sec:  14756.26
Transfer/sec:      2.27MB
```

fixed cities

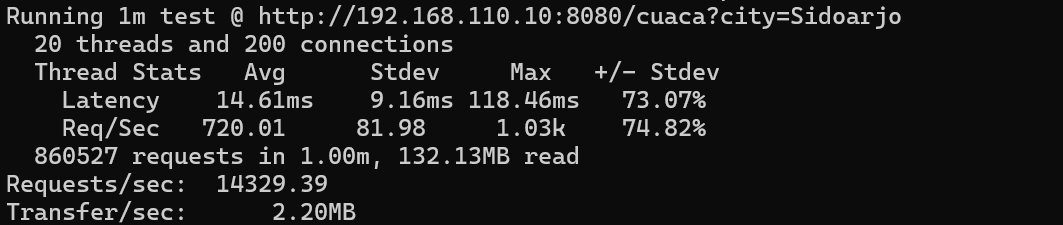

```text
Running 1m test @ http://192.168.110.10:8080/cuaca?city=Sidoarjo
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    14.61ms    9.16ms 118.46ms   73.07%
    Req/Sec   720.01     81.98     1.03k    74.82%
  860527 requests in 1.00m, 132.13MB read
Requests/sec:  14329.39
Transfer/sec:      2.20MB
```

| No | Server | Type | Total Requests | Req/sec | Latency (Avg) |
|---|---|---|---|---|---|
| 1 | Main Server | Random Cities | 1825995 | 30415.26 | 6.61ms |
| 2 | Main Server | Fixed Cities | 1802833 | 30023.30 | 6.70ms |
| 3 | Second Instance | Random Cities | 813082 | 13541.81 | 15.89ms |
| 4 | Second Instance | Fixed Cities | 794036 | 13222.44 | 16.23ms |
| 5 | Third Instance | Random Cities | 886055 | 14756.26 | 14.50ms |
| 6 | Third Instance | Fixed Cities | 860527 | 14329.39 | 14.61ms |

The data in this table surprised me: the Second and Third Instances have the same hardware but noticeably different results. The Third Instance, with the newer Ubuntu version, handled 72,973 more requests in the random-cities test (886,055 vs 813,082), so a newer OS may improve the application's performance. Interesting.
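The gap between the two ARM boards is simple arithmetic on the table; the Python sketch below (relative_gain is my own helper name) computes it for both the random and fixed cases. It only restates the measurements and does not show why the gap exists.

```python
def relative_gain(old, new):
    """Return (absolute difference, percentage gain of new over old)."""
    diff = new - old
    return diff, diff / old * 100


# Direct (no proxy) totals: Second Instance (Ubuntu 20) vs Third Instance (Ubuntu 22).
for case, second, third in [("random cities", 813_082, 886_055), ("fixed cities", 794_036, 860_527)]:
    diff, pct = relative_gain(second, third)
    print(f"{case}: +{diff} requests ({pct:.2f}% more)")
# random cities: +72973 requests (8.97% more)
# fixed cities: +66491 requests (8.37% more)
```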

Reverse Proxy with Nginx Default Configuration

The Nginx configuration used to reverse proxy to each upstream is very, very basic, so that it can be compared straight against KONG next, which will also use a very basic configuration.

```nginx
upstream backend {
    server 192.168.110.123:8080; # will change to 110.123, 110.100, 110.10
}

server {
    listen 80;
    server_name nikko.id;

    location / {
        proxy_pass http://backend;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Improve performance
        # (these two lines only let Nginx reuse upstream connections when the
        # upstream block also has a "keepalive" directive, which is not set here)
        proxy_http_version 1.1;
        proxy_set_header Connection "";
    }
}
```

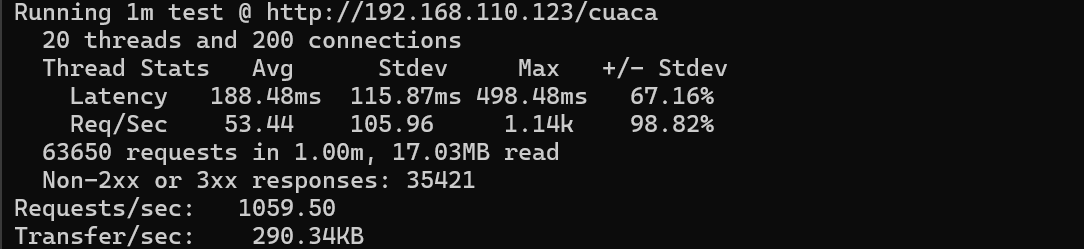

Nginx Main server to upstream golang main server

random cities

```text
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   188.48ms  115.87ms 498.48ms   67.16%
    Req/Sec    53.44    105.96     1.14k    98.82%
  63650 requests in 1.00m, 17.03MB read
  Non-2xx or 3xx responses: 35421
Requests/sec:   1059.50
Transfer/sec:    290.34KB
```

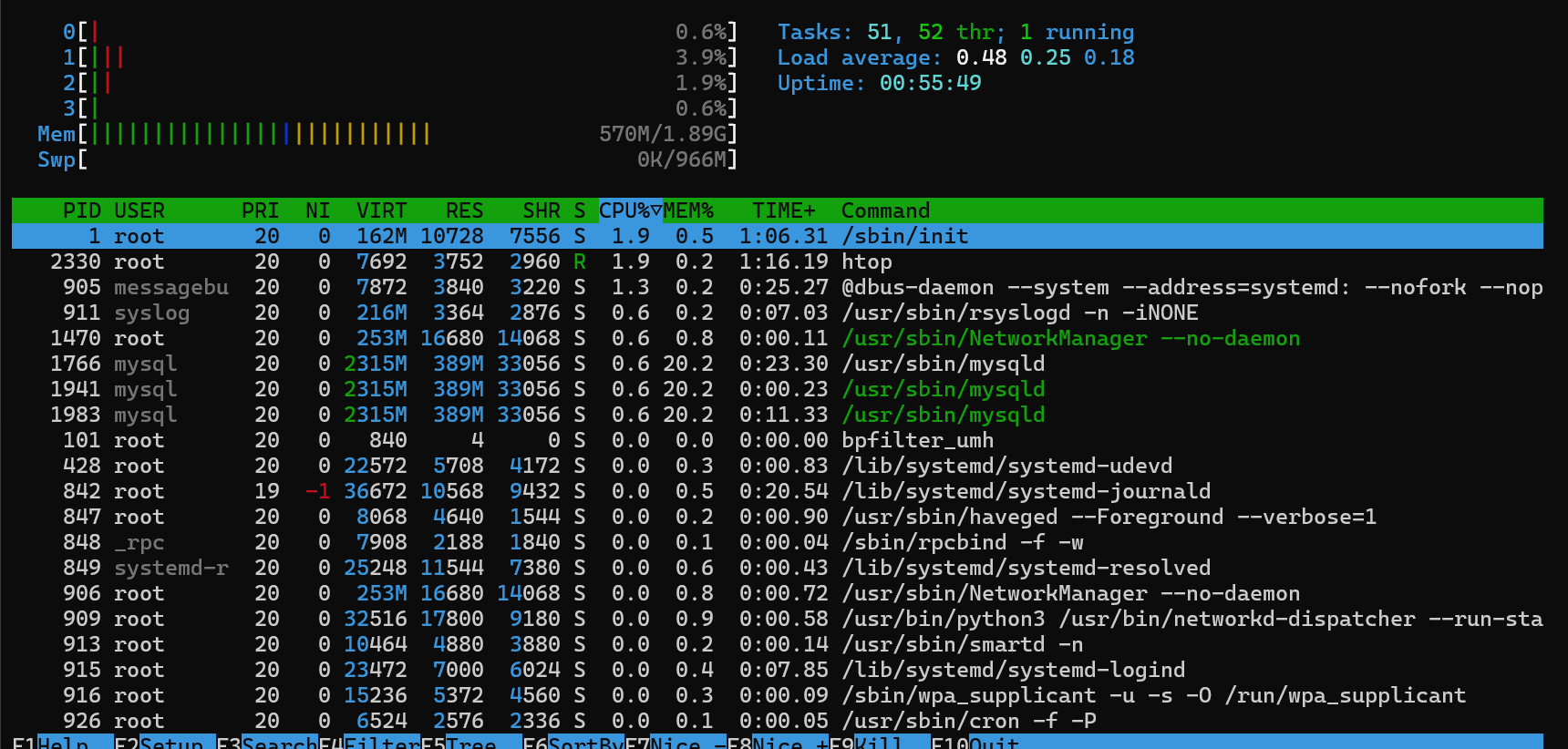

Nginx main server to upstream second instance

Server Second Instance

random cities

Running 1m test @ http://192.168.110.123/cuaca

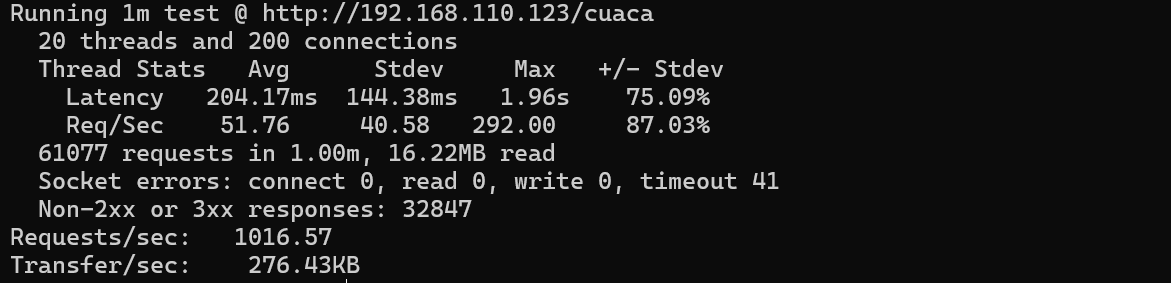

20 threads and 200 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 204.17ms 144.38ms 1.96s 75.09%

Req/Sec 51.76 40.58 292.00 87.03%

61077 requests in 1.00m, 16.22MB read

Socket errors: connect 0, read 0, write 0, timeout 41

Non-2xx or 3xx responses: 32847

Requests/sec: 1016.57

Transfer/sec: 276.43KB-

Nginx main server to upstream third instance

random cities

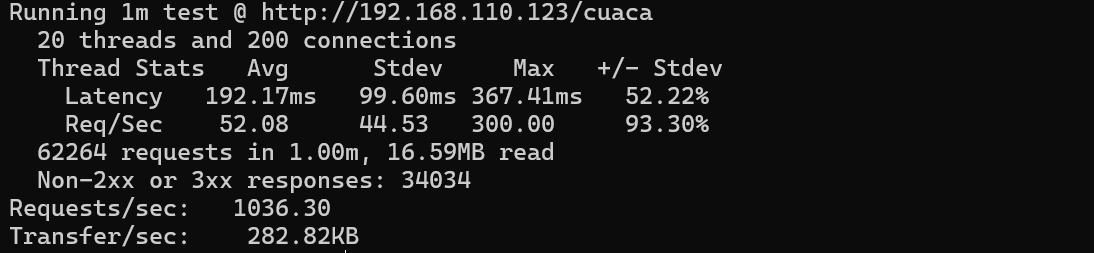

```text
Running 1m test @ http://192.168.110.123/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   192.17ms   99.60ms 367.41ms   52.22%
    Req/Sec    52.08     44.53   300.00     93.30%
  62264 requests in 1.00m, 16.59MB read
  Non-2xx or 3xx responses: 34034
Requests/sec:   1036.30
Transfer/sec:    282.82KB
```

| No | Type | Total Requests (via Nginx) | Total Requests (direct, no proxy) |
|---|---|---|---|
| 1 | Nginx main server to golang main server | 63650 | 1825995 |
| 2 | Nginx main server to golang second instance | 61077 | 813082 |
| 3 | Nginx main server to golang third instance | 62264 | 886055 |

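Across all instances, the Nginx reverse proxy handled about 62,330 requests on average ((63,650 + 61,077 + 62,264) / 3). Compared with the highest direct result, that is a drop of about 96.59%: ((highest direct total - average Nginx total) / highest direct total) × 100 = ((1,825,995 - 62,330) / 1,825,995) × 100 ≈ 96.59%. On top of that, more than half of the Nginx responses were non-2xx/3xx (for example, 35,421 of 63,650 for the main server), so the number of successful requests is even lower. The main advantage of using Nginx is its features, such as logging, and the resource usage on every instance does not spike, since requests are first handled by Nginx itself.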
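The same numbers can be reproduced with a few lines of Python. The sketch below (drop_rate is my own helper name) computes the average-versus-highest comparison used above, and also a per-instance view computed the same way as the KONG drop-rate table later in this article; per instance, the Nginx drops come out to about 96.5%, 92.5%, and 93.0%.

```python
def drop_rate(previous, now):
    """Drop Rate in % = ((previous - now) / previous) * 100"""
    return (previous - now) / previous * 100


# Total requests for the random-cities test, copied from the tables above.
direct = {"main": 1_825_995, "second": 813_082, "third": 886_055}  # Golang serving directly
nginx = {"main": 63_650, "second": 61_077, "third": 62_264}        # behind the Nginx reverse proxy

# Average Nginx total vs. the highest direct total.
avg_nginx = sum(nginx.values()) / len(nginx)
print(f"average Nginx total: {avg_nginx:.2f}")  # 62330.33
print(f"drop vs. highest direct total: {drop_rate(max(direct.values()), avg_nginx):.2f}%")  # 96.59%

# Per-instance comparison: each upstream against its own direct result.
for name in direct:
    print(f"{name}: {drop_rate(direct[name], nginx[name]):.2f}%")
```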

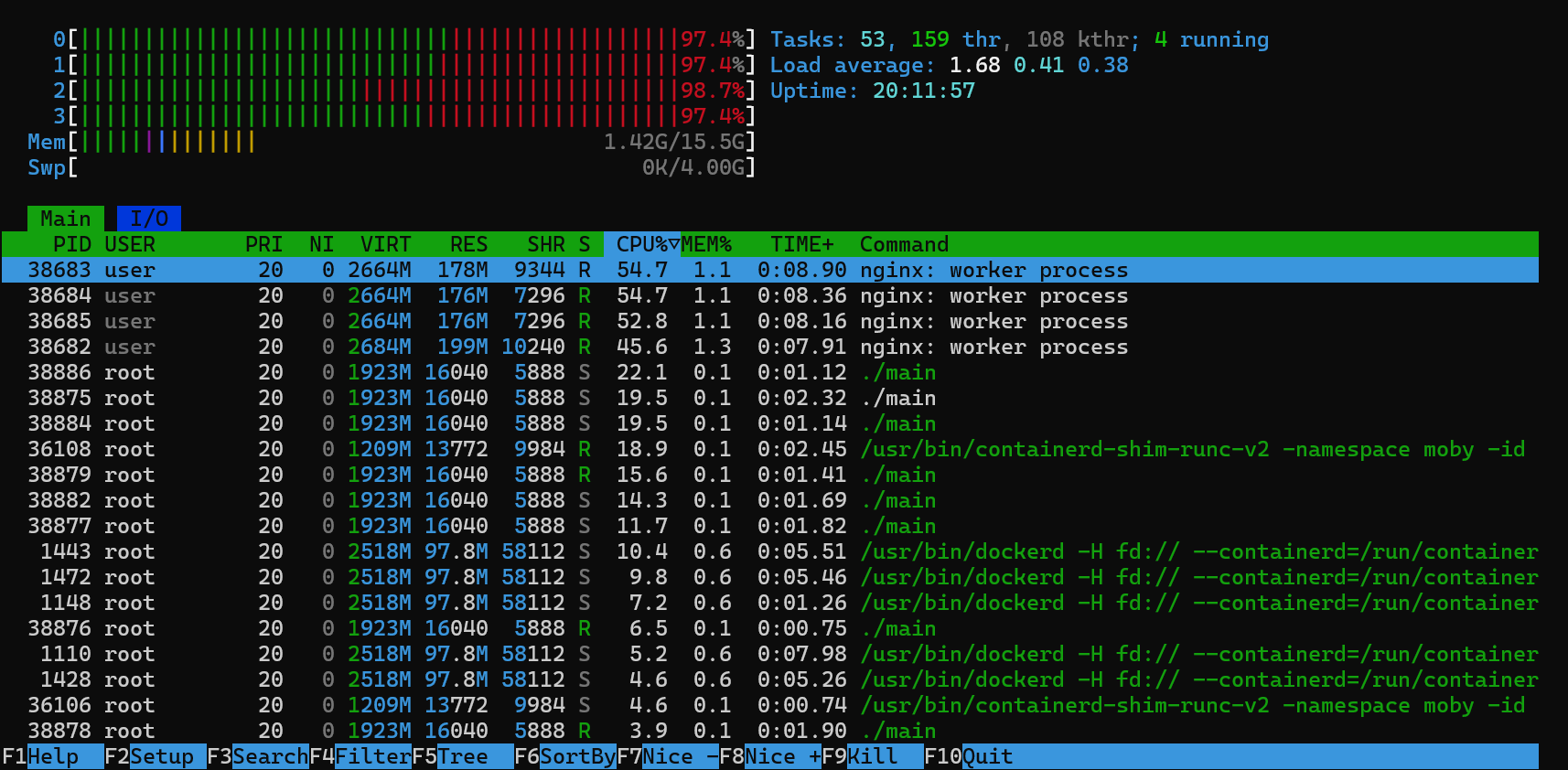

Reverse Proxy with KONG Default Configuration

In this test I use a DB-less KONG installation on Docker, again with a very basic configuration like the previous Nginx test.

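```yaml
_format_version: "3.0"

services:
  - name: my-backend-service
    url: http://192.168.110.123:8080
    routes:
      - name: my-backend-route
        # strip_path defaults to true, so a request to /api/cuaca
        # is forwarded to the Go app as /cuaca
        paths:
          - /api
```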

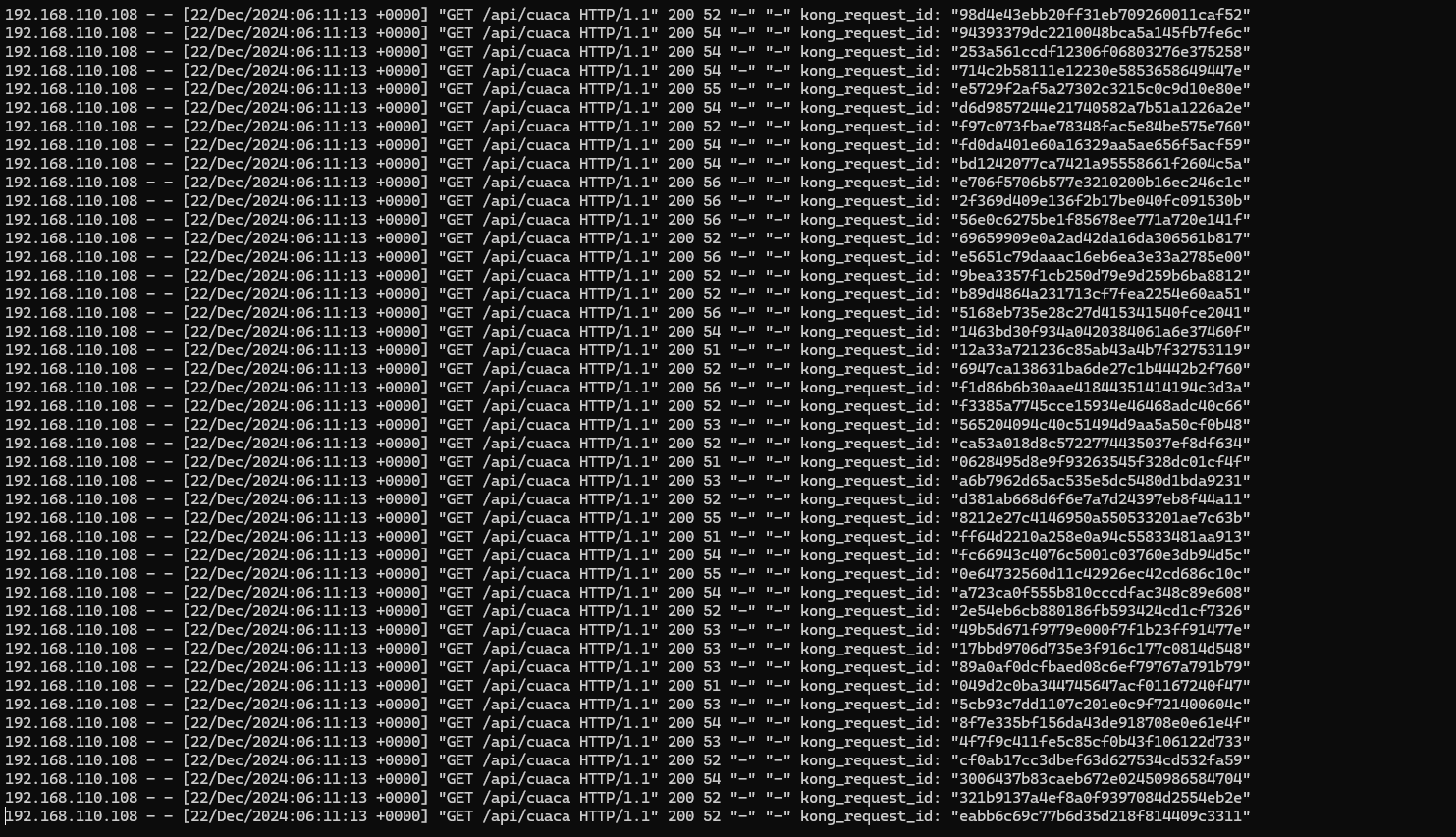

KONG Main server to upstream golang main server

random cities

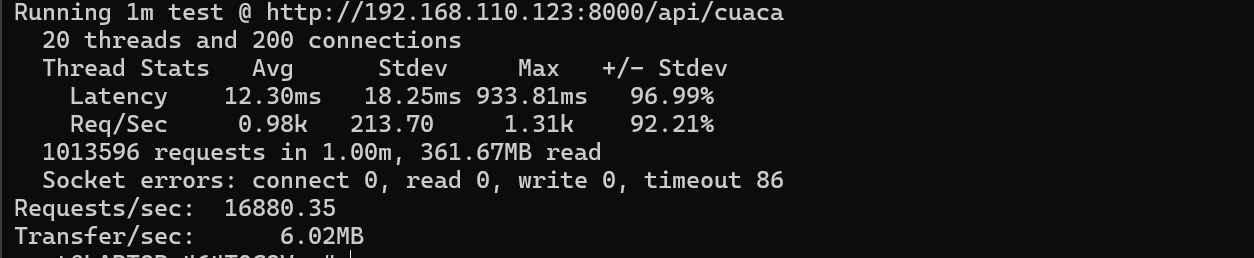

```text
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    12.30ms   18.25ms 933.81ms   96.99%
    Req/Sec     0.98k   213.70     1.31k    92.21%
  1013596 requests in 1.00m, 361.67MB read
  Socket errors: connect 0, read 0, write 0, timeout 86
Requests/sec:  16880.35
Transfer/sec:      6.02MB
```

KONG Main server to upstream second instance

random cities

```text
Running 1m test @ http://192.168.110.123:8000/api/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    18.79ms   11.77ms 167.23ms   76.82%
    Req/Sec   562.87     62.13   828.00     68.85%
  672784 requests in 1.00m, 240.41MB read
Requests/sec:  11201.91
Transfer/sec:      4.00MB
```

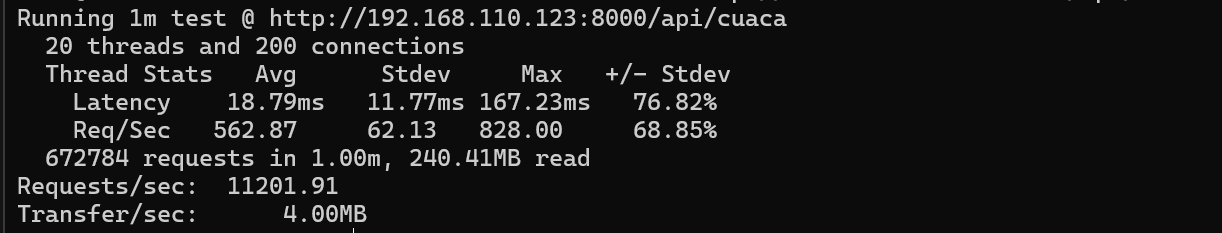

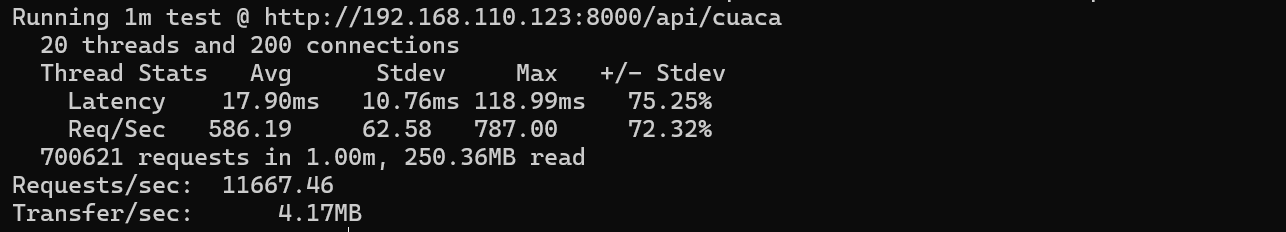

KONG Main server to upstream third instance

random cities

```text
Running 1m test @ http://192.168.110.123:8000/api/cuaca
  20 threads and 200 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    17.90ms   10.76ms 118.99ms   75.25%
    Req/Sec   586.19     62.58   787.00     72.32%
  700621 requests in 1.00m, 250.36MB read
Requests/sec:  11667.46
Transfer/sec:      4.17MB
```

| No | Type | Total Requests (via KONG) | Total Requests (direct, no proxy) |
|---|---|---|---|
| 1 | KONG main server to golang main server | 1013596 | 1825995 |
| 2 | KONG main server to golang second instance | 672784 | 813082 |
| 3 | KONG main server to golang third instance | 700621 | 886055 |

With the KONG reverse proxy, the total requests stay much closer to the direct results than with the Nginx reverse proxy. The drop rates are calculated below:

| No | KONG Total Requests (now) | Direct Total Requests (previous) | Drop Rate (%) |
|---|---|---|---|
| 1 Main | 1,013,596 | 1,825,995 | 44.49% |
| 2 Second | 672,784 | 813,082 | 17.26% |
| 3 Third | 700,621 | 886,055 | 20.93% |

Drop Rate in % = ((Previous - Now) / Previous) * 100
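The formula can be applied to all three rows with a small Python helper (the name drop_rate is my own); the inputs are copied from the tables above and the output matches the Drop Rate column.

```python
def drop_rate(previous, now):
    """Drop Rate in % = ((Previous - Now) / Previous) * 100"""
    return (previous - now) / previous * 100


# name: (KONG total, direct total), copied from the tables above.
rows = {
    "Main": (1_013_596, 1_825_995),
    "Second": (672_784, 813_082),
    "Third": (700_621, 886_055),
}

for name, (kong_total, direct_total) in rows.items():
    print(f"{name}: {drop_rate(direct_total, kong_total):.2f}%")
# Main: 44.49%
# Second: 17.26%
# Third: 20.93%
```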

The drop rates are much lower than with the Nginx reverse proxy, while the resource spike on every server is about the same as when the Golang application serves HTTP directly. So the advantages of KONG here are that it also provides HTTP request logging and that its drop rate is lower than Nginx's, while the resource usage on the upstreams is about the same as raw HTTP serving.

Load Balancing & Load Testing

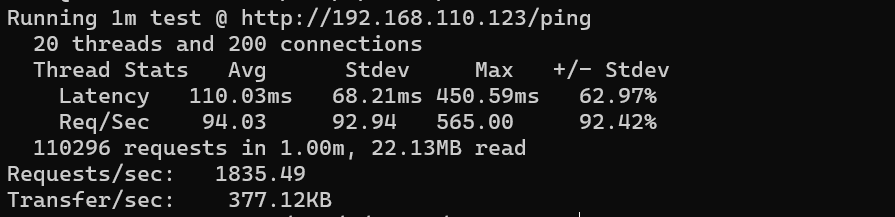

In this section I want to test how Nginx and KONG perform load balancing with the “Least Connections” method, to see how effective each of them is. The goal of this test is to count how many responses each upstream instance returns when the /ping endpoint is hit.
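Least connections itself is a simple rule: send the next request to the upstream with the fewest requests in flight. The Python sketch below is a model, not how Nginx or KONG implement it internally; the function names, the active/weight scoring, and the service times are my own assumptions. The tick-based simulation gives the main server made-up service times twice as fast as the ARM boards, and it ends up receiving more requests, which is one plausible reason for an uneven split.

```python
def pick_least_connections(active, weights=None):
    """Return the upstream with the fewest active connections.

    active maps an upstream name to its number of in-flight requests.
    weights (optional) maps a name to its weight; the score is active / weight.
    Ties go to the first name in insertion order. This is a simplification:
    Nginx, for example, rotates among tied servers with weighted round-robin.
    """
    weights = weights or {}
    return min(active, key=lambda name: active[name] / weights.get(name, 1))


def simulate(service_ticks, arrivals_per_tick, ticks):
    """Count how many requests each upstream receives under least connections.

    service_ticks maps an upstream name to how many ticks one request takes
    there. These are made-up numbers, not measurements from the article.
    """
    in_flight = {name: [] for name in service_ticks}  # finish tick of each open request
    served = {name: 0 for name in service_ticks}
    for now in range(ticks):
        # Forget requests that have finished by this tick.
        for name in in_flight:
            in_flight[name] = [t for t in in_flight[name] if t > now]
        for _ in range(arrivals_per_tick):
            active = {name: len(reqs) for name, reqs in in_flight.items()}
            target = pick_least_connections(active)
            in_flight[target].append(now + service_ticks[target])
            served[target] += 1
    return served


# Hypothetical setup: the main server finishes a request in 1 tick, the ARM boards need 2.
print(simulate({"arm-a": 2, "arm-b": 2, "main": 1}, arrivals_per_tick=6, ticks=10))
```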

To simulate the load test, I used a custom Python script that mimics the wrk command (20 threads, 200 connections, 1 minute of execution). Why custom code? Because I need to record which upstream answered each /ping request so I can count them later.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

import requests

URL = "http://192.168.110.123/ping"

total_requests = 0
total_success = 0
total_failures = 0
# The counters and the log file are shared by all worker threads, so guard them with a lock.
lock = threading.Lock()


def write_log(data):
    # Append one /ping response body per line; the counting script parses this file later.
    with open("log.txt", "a") as f:
        f.write(data + "\n")


def make_request():
    global total_requests, total_success, total_failures
    try:
        response = requests.get(URL, timeout=5)
        ok = response.status_code == 200
        body = response.text
    except requests.RequestException:
        ok, body = False, None
    with lock:
        total_requests += 1
        if ok:
            total_success += 1
            write_log(body)
        else:
            total_failures += 1


def main():
    start_time = time.time()
    end_time = start_time + 60  # run for 1 minute, like wrk -d 60

    # 20 worker threads means at most 20 requests are in flight at once;
    # each loop iteration submits a batch of 200 requests and waits for all of them.
    with ThreadPoolExecutor(max_workers=20) as executor:
        while time.time() < end_time:
            futures = [executor.submit(make_request) for _ in range(200)]
            for future in futures:
                future.result()

    elapsed_time = time.time() - start_time
    print(f"Total requests: {total_requests}")
    print(f"Total successful requests: {total_success}")
    print(f"Total failed requests: {total_failures}")
    print(f"RPS (Requests Per Second): {total_requests / elapsed_time}")


if __name__ == "__main__":
    main()
```

And to count how often each IP and hostname appears, I use this Python 3 script, with a regex that matches the hostname and IP in every response from the load balancer.

```python
import re

hasil = {}  # "hasil" = results: hostname -> {"hostname", "ip", "count"}

# Matches the /ping body "Halo dari hostname: <name> dengan IP: <IPv4>";
# the hostname class also allows dots and hyphens.
ip_regex = r"Halo dari hostname: ([\w.-]+) dengan IP: (\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})"

with open("log.txt", "r") as file:
    for baris in file:  # "baris" = line
        match = re.search(ip_regex, baris)
        if match:
            hostname = match.group(1)
            ip = match.group(2)
            if hostname in hasil:
                hasil[hostname]["count"] += 1
            else:
                hasil[hostname] = {"hostname": hostname, "ip": ip, "count": 1}

for nilai in hasil.values():  # "nilai" = value
    print(nilai)
```

**NOPE!** wrk supports scripting, so it can save the response bodies by itself. Here is my wrk .lua script that saves every response body to log.txt:

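```lua
-- wrk runs this script in each thread's own Lua state, so each thread opens its own handle.
local logfile = io.open("log.txt", "a")

-- Defining response() makes wrk parse every response's headers and body,
-- which adds some overhead compared with a plain wrk run.
response = function(status, headers, body)
    logfile:write(body .. "\n")
    logfile:flush()
end

done = function()
    logfile:close()
end
```

Run it with:

```shell
wrk -t 20 -c 200 -d 60 -s response_log.lua "ips"
```

Nginx Load Balancing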

```nginx
upstream backend {
    least_conn;

    server 192.168.110.123:8080;
    server 192.168.110.100:8080;
    server 192.168.110.10:8080;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

With the configuration above, I put the 3 instances into an upstream group called backend and set it to use least_conn.

Total Requests = 41460 + 33592 + 35244 = 110296

| Hostname | Request Count | Percentage (%) |
|---|---|---|
| hacking | 41460 | 37.59% |
| NepBig | 33592 | 30.46% |
| NepHack | 35244 | 31.95% |
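The percentage column is each hostname's count divided by the total of 110,296. A quick Python check (shares is my own helper name), which also confirms that the three shares add up to 100%:

```python
def shares(counts):
    """Return each hostname's share of the total request count, in percent."""
    total = sum(counts.values())
    return {host: count / total * 100 for host, count in counts.items()}


# Nginx least_conn results from the table above.
nginx_counts = {"hacking": 41_460, "NepBig": 33_592, "NepHack": 35_244}
for host, pct in shares(nginx_counts).items():
    print(f"{host}: {pct:.2f}%")
# hacking: 37.59%
# NepBig: 30.46%
# NepHack: 31.95%
```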

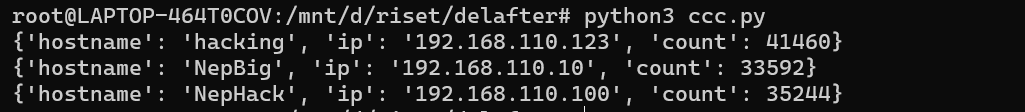

KONG Load Balancing

```yaml
_format_version: "3.0"
_transform: true

upstreams:
  - name: my-upstream
    algorithm: least-connections
    targets:
      - target: "192.168.110.123:8080"
        weight: 100
      - target: "192.168.110.100:8080"
        weight: 100
      - target: "192.168.110.10:8080"
        weight: 100

services:
  - name: my-service
    url: "http://my-upstream"

routes:
  - name: my-route
    paths:
      - "/"
    strip_path: true
    service: my-service
```

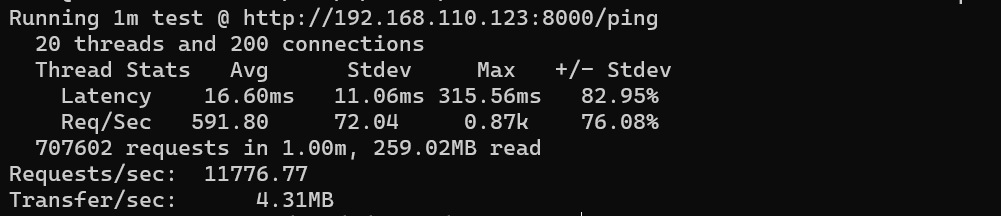

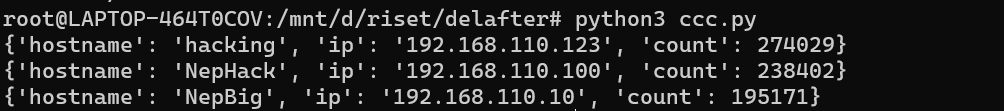

Total Requests = 274029 + 238402 + 195171 = 707602

| Hostname | Request Count | Percentage (%) |
|---|---|---|
| hacking | 274029 | 38.73% |
| NepHack | 238402 | 33.69% |
| NepBig | 195171 | 27.58% |

After these two Least Connections load-balancing tests, KONG again handled far more total requests than Nginx: 707,602 vs 110,296. In other words, Nginx handled about 84.41% fewer requests than KONG.
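The ~84.41% figure is the gap measured relative to KONG's total; measured the other way round, KONG handled about 6.4 times as many requests as Nginx. A short Python check of both numbers, using the totals from the two tables:

```python
# Total /ping requests counted per load balancer, from the two tables above.
nginx_total = 41_460 + 33_592 + 35_244    # 110296
kong_total = 274_029 + 238_402 + 195_171  # 707602

fewer_pct = (kong_total - nginx_total) / kong_total * 100
ratio = kong_total / nginx_total
print(f"Nginx handled {fewer_pct:.2f}% fewer requests than KONG")  # 84.41%
print(f"KONG handled {ratio:.1f}x as many requests as Nginx")     # 6.4x
```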

The distribution shows that hostname hacking (the Main Server) received more requests than the other upstreams, probably because the main server has better specifications and responds faster than the others.

Both Nginx and KONG were able to distribute the load across all upstreams. The distribution is not perfectly balanced, but personally I'm satisfied with the results: the Main Server should receive more requests, since its specifications are much better than the others'.

Closing Statement

From these 2 types of tests, the reverse proxy load test and the load balancing load test, I have gained a new point of view: personally, I prefer KONG for handling reverse proxying and load balancing. It's a quick conclusion from a junior engineer like me, but the data already speaks.

In the future I will explore KONG further in a production-ready project to understand the pros and cons of using it. This kind of testing may not say much about real production scenarios or about KONG best practices.

KONG's features are impressive from my junior-engineer point of view: configuration changes can be applied without restarting the service, while with Nginx I have to reload it every time the configuration changes. That is a big plus for me, and KONG's YAML configuration syntax is also quite easy to understand.

So, thank you for reading this article. Once again, this is my playground for practicing my English writing skills. Sorry if my explanation doesn't give you what you were looking for, or if something above is wrong from your point of view; please tell me how to do it right by chatting with me at @rainysm (Telegram).